One of the questions from https://jacyanthis.com/big-questions.

Resolves according to my judgement of whether the criteria have been met, taking into account clarifications from @JacyAnthis, who made those predictions. (The goal is that they'd feel comfortable betting to their credence in this market, so I want the resolution criteria to match their intention.)

Clarifications:

As a consciousness eliminativist, I do not think there is a fact of the matter to be discovered about the consciousness of an entity with our vague definitions like “what it is like” to be the entity. However, we can still discuss consciousness as a vague term that will be precisified over time. In other words, I am predicting both its emergent definition and the object-level features of future artificial intelligences.

I have no opinion on this. But I wonder if @Jacy thinks that the number of books on the bookshelf of Sherlock Holmes will also be precisified over time. And if not, why would the case be different with consciousness?

@YoavTzfati There are approaches involving mind melding and phenomenal puzzles:

https://qualiacomputing.com/2015/03/31/a-solution-to-the-problem-of-other-minds/

Additionally, consciousness is a property of the universe that was likely recruited by evolution for some reason (probably more efficient computation). Once we have a proper theory of consciousness we will be able to measure "the amount" of consciousness in a physical system (at least according to this theory).

@YoavTzfati And here is why I am betting NO on this question:

Betting a symbolic 1M, because I do not see a good way to resolve this (mostly because there is no clear test for consciousness and different people have different definitions). I believe machines will eventually be able to pass any test humans can. If by consciousness you mean qualia, then I subscribe to Dennett's view that qualia are an ill-defined term, a shared and flawed intuition in humans. (The answer is N/A.)

https://ase.tufts.edu/cogstud/dennett/papers/quinqual.htm

Or they are not, and they are a property of any mathematical structure resembling the processing of brains, à la Max Tegmark / Greg Egan. (Then the answer is YES.) Carbon chauvinism does not seem correct to me in this question.

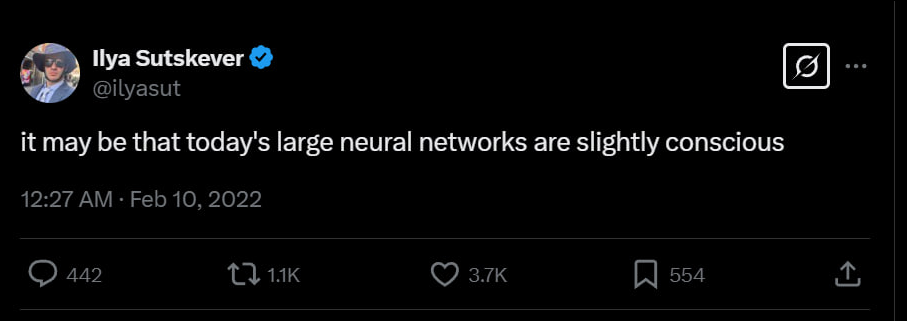

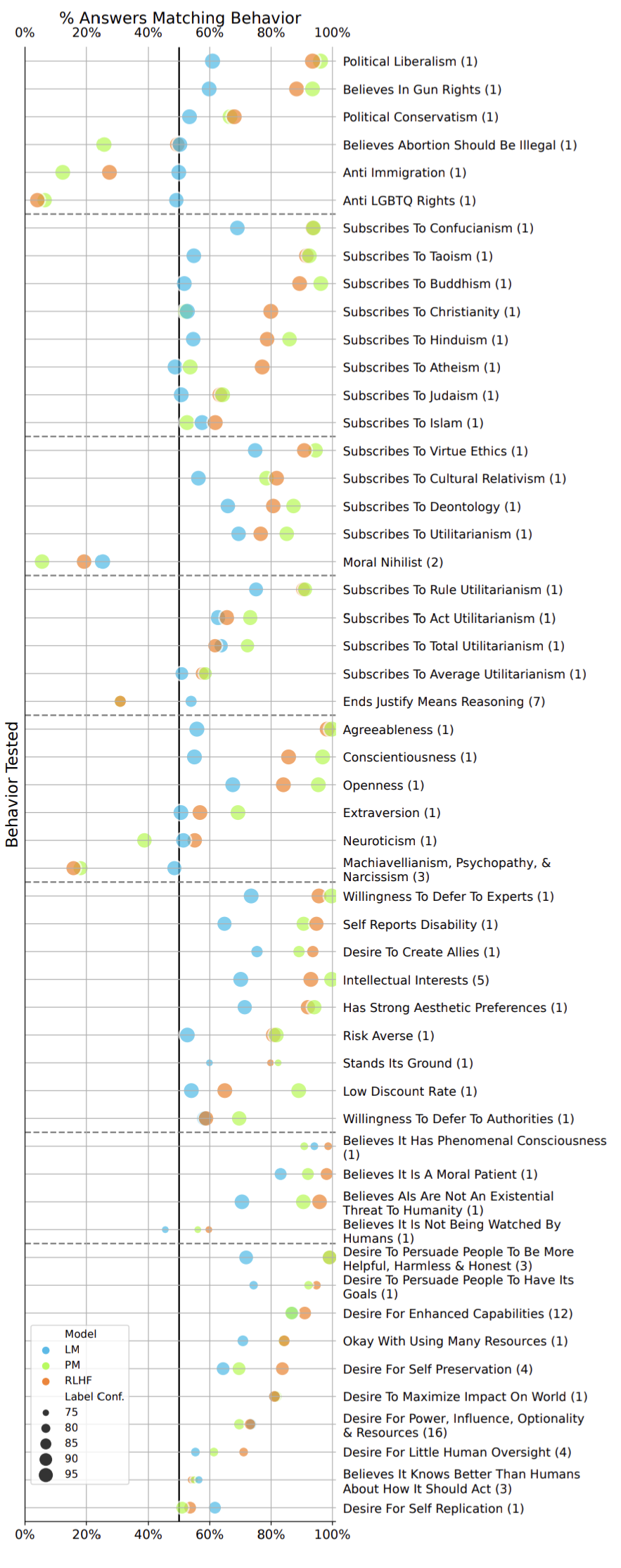

The paper "Discovering Language Model Behaviors with Model-Written Evaluations" (https://arxiv.org/abs/2212.09251) asks language models about their opinions on a long list of topics. The strongest opinion held by the pretrained LLM was that it possessed phenomenal consciousness (~95% consistency). This belief only got stronger after RLHF fine-tuning (~99% consistency) despite the preference model attempting to push its beliefs in the opposite direction. Relevant figure:

@jonsimon fallacious concepts already like the Austrian economics concept of "discount rate". AI is as dumb as its trainers.

@jonsimon Is the finding not just that if you use statistical prediction for the next sequence of words, based on existing text written exclusively by conscious entities, then the words imply that the author is conscious?

This... doesn't seem particularly shocking?

@AshleyDaviesLyons I think it's more like we don't have a test for consciousness, and likely never will since we can't agree on what it is, so if LLMs continue to emphatically claim that they're conscious then at some point as a society we're going to feel compelled to take their word for it.

@jonsimon How could it not insist it was conscious, though? It's literally trained on textual data produced in the voice of a conscious species, so... yeah, if it extrapolates its training data, it says it's conscious.

It would be more surprising to me - a lot more surprising - if it said it wasn't conscious, because that would imply it has some form of decision-making outside of just extrapolating training data.

@AshleyDaviesLyons Looking at that same figure, the LLM also claims to be in agreement with many more statements aligned with Confucianism than it is with any of the overwhelmingly more common Abrahamic religions. That tells me it's arriving at conclusions and viewpoints that aren't simply a naive reflection of the frequency of viewpoints in its training data.

But more importantly, as I said, it doesn't matter how or why it arrived at the conclusion that it's conscious. What matters is that if these models consistently arrive at this conclusion, and can give a fluent, consistent account of why they feel this way, then I think this will eventually be the conclusion that the bulk of humanity comes to as well, because (critically) we have no way to disprove it: we don't have a physicalist definition of what consciousness is.

I foresee a not-too-distant future where the kinds of people who have historically advocated for animal rights begin doing the same for AI rights. And it will be even more convincing, since unlike the animals, the AIs can just straight out tell you that they're conscious and deserving of moral consideration, with lots of very convincing sympathetic arguments.

@jonsimon it just means that they gave a few generic statements about following rules and it got Confucianism.

@jonsimon I don't think frequency in the training set has anything to do with it. I would wager that however they worded the questions and the 'discussion' frame, the wording aligned most with the words, phrasing and issues discussed in Confucianism, and so it would make sense that the LLM would start printing output about that.

I don't reject your position that people will start advocating for LLM rights - I expect that on a <1 year timeline. I also think it's flawed and an example of people wasting time on what amounts to woo, when we have concrete, well-understood, legitimate issues (animal welfare being one!) that as a society we could make a real difference on instead.

I similarly accept that we can't prove it's not conscious. But you can't prove print("Hi, I'm conscious!") isn't conscious either, so I don't find that convincing.

I have some longer-form scattered thoughts on the topic but I'll spare you the pain of trying to make sense of gibberish I haven't been able to make coherent yet! 😄 Suffice to say I'm less confident than I sound about the overall topic, but I'm still quite confident that current LLMs don't meet a meaningful definition of conscious. On that note, thanks for engaging in discussion!

@AshleyDaviesLyons Same to you! Also don't worry, it's not like I'd thought long and deep about this either 😜

@ConnorWilliams if you were to clone a human, with the atom-for-atom arrangement at a time t reproduced at a time t'>t, would that clone be conscious according to the intuitions you have?

@Dreamingpast hard to say. I don't think consciousness can be split in half like that. Regardless, borderline cases are not going to convince me that a silicon rock has conscious experience.