In "Situational Awareness: The Decade Ahead", Leopold Aschenbrenner claims:

> Another way of thinking about it is that given inference fleets in 2027, we should be able to generate an entire internet’s worth of tokens, every single day.

Resolves YES if, by the end of 2027, there is enough deployed inference capacity to generate 30 trillion tokens in a 24-hour period using a combination of frontier models. "Frontier models" means models at the frontier in the sense that GPT-4 is a frontier model today, in mid-2024.
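For a sense of scale, the 30 trillion tokens per day threshold can be converted into a sustained per-second rate. The snippet below is only a rough sketch of that arithmetic; the function names and the idea of summing per-provider daily figures are illustrative assumptions, not part of the resolution criteria.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds in a 24-hour period
THRESHOLD_TOKENS_PER_DAY = 30e12  # 30 trillion tokens, from the resolution criteria


def required_tokens_per_second(tokens_per_day: float) -> float:
    """Average rate needed to produce `tokens_per_day` within 24 hours."""
    return tokens_per_day / SECONDS_PER_DAY


def meets_threshold(daily_tokens_by_provider: dict[str, float],
                    threshold: float = THRESHOLD_TOKENS_PER_DAY) -> bool:
    """Hypothetical helper: does the combined daily output reach the threshold?"""
    return sum(daily_tokens_by_provider.values()) >= threshold


rate = required_tokens_per_second(THRESHOLD_TOKENS_PER_DAY)
print(f"{rate:,.0f} tokens per second")  # 347,222,222 tokens per second
```

In other words, the combined fleets would need to sustain roughly 350 million tokens per second, averaged over the day.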

This is one of a series of markets on claims made in Leopold Aschenbrenner's Situational Awareness report(s):

Other markets about Leopold's predictions:

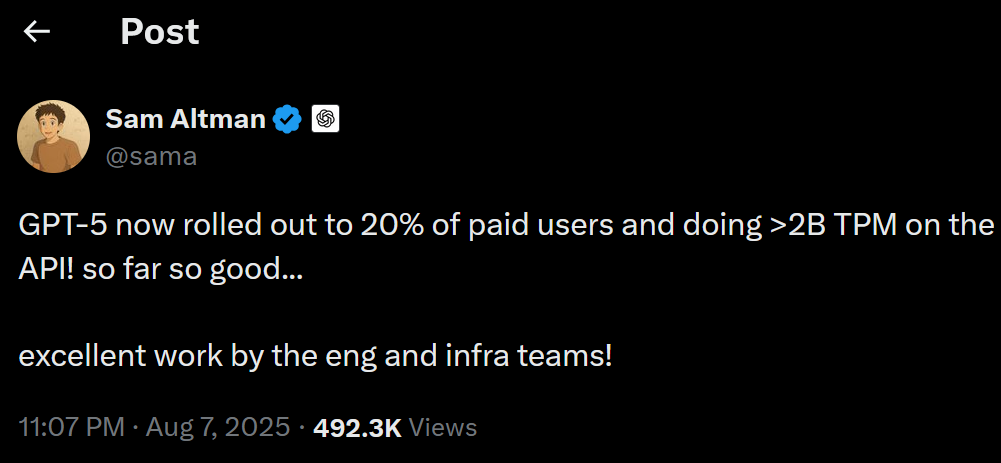

@Tossup This resolves YES. Google alone was serving 30T tokens per day during June; see https://x.com/demishassabis/status/1948579654790774931

In theory, some of those tokens could come from legacy models, but legacy models don't account for many tokens to begin with. And Google is not alone: OpenAI is serving even more tokens than Google.