Please answer as you would for the question "will AI wipe out humanity before the year 2100".

If your answer is "no", choose "will not cause extinction".

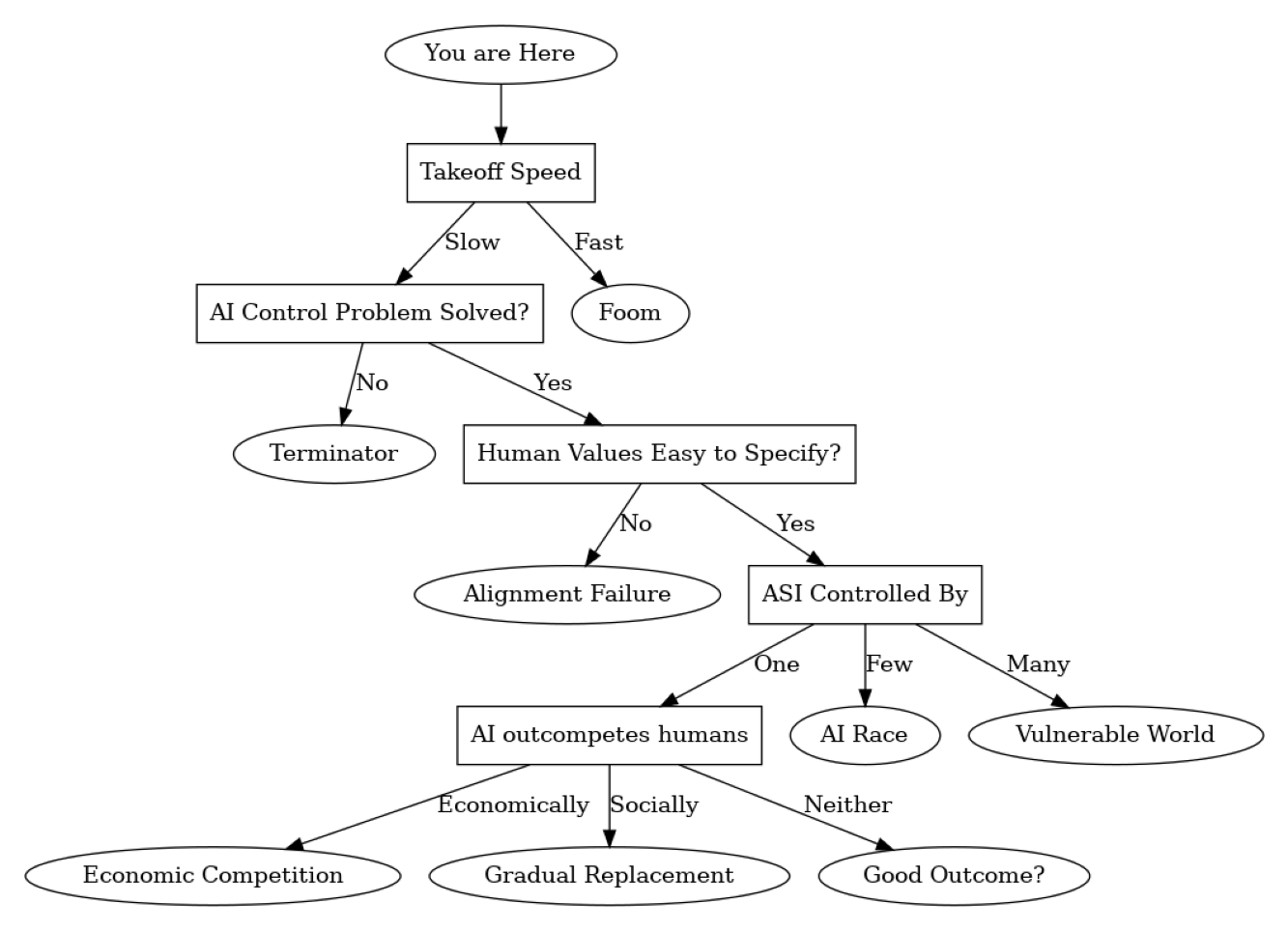

If your answer is "yes", please try to identify which of the following scenarios will be the "main" cause for human extinction:

Foom: rapidly self-improving AI destroys humanity as a result of pursuing some misaligned goal.

Terminator: AI blatantly disregards human instructions and pursues its own goals.

Alignment Failure: AI appears superficially good but causes extinction due to a subtly mis-stated goal.

AI Race: A race between two competing AI labs or countries to "control the future light cone" leads to the extinction of humanity.

Vulnerable World Hypothesis: AI allows the creation of new Weapons of Mass Destruction which are used by an individual (or small group) to destroy the world.

Economic Competition: AI takes all human jobs and property, causing humans to die out.

Gradual Replacement: AI and humans merge into a post-human species that replaces humanity, causing human extinction (however you define that).

Other: substantially different in some way from the above (please comment)

Because of the nature of the question, it is unlikely that you will live to collect your winnings. Please bet in the spirit of accuracy and not with the intention to confuse or misinform.

PS: the order listed above is the order I will resolve answers in. So if AI is both misaligned and fooms, I will resolve in favor of Foom.
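To make the tie-break rule concrete, here is a minimal sketch of "first matching scenario in listed order wins". The scenario names are copied from the list above; the `resolve` helper and the choice to return "will not cause extinction" for an empty set are assumptions made for illustration, not anything the market itself runs.

```python
# Scenario names from the market description, in the order they are listed.
RESOLUTION_ORDER = [
    "Foom",
    "Terminator",
    "Alignment Failure",
    "AI Race",
    "Vulnerable World Hypothesis",
    "Economic Competition",
    "Gradual Replacement",
    "Other",
]


def resolve(applicable):
    """Hypothetical helper: return the earliest listed scenario that applies.

    `applicable` is the set of scenario names judged to describe what happened.
    Assumption: an empty set means no extinction occurred.
    """
    for scenario in RESOLUTION_ORDER:
        if scenario in applicable:
            return scenario
    return "will not cause extinction"
```

For example, `resolve({"Alignment Failure", "Foom"})` picks "Foom" because it appears earlier in the list.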

https://manifold.markets/jgould1090Gould/how-many-times-before-january-1st-w?r=amdvdWxkMTA5MEdvdWxk

Hi, there is a market that might interest you.

@Undox There’s always the chance that we’re still around AND humans are extinct.

We’d just need to not be human anymore.

This is a market where meta-level reasons leave only one sensible bet.

If humans, and Manifold, are still around, the answer will have been "no", and anyone who bet on no will have more mana. If humans are not still around, the market and its resolution won't matter.
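A rough sketch of that argument in numbers, under loud simplifying assumptions: shares are bought at a fixed price equal to the displayed probability and pay 1 mana each if correct, and Manifold's actual market maker, fees, and loans are ignored. The function name and parameters are made up for illustration.

```python
def profit_if_humans_survive(stake, price_yes, side):
    """Profit in mana in the only world where payouts matter: the market resolves "no".

    Assumption: fixed-price shares that pay 1 mana each when correct.
    `price_yes` is the market probability of extinction, strictly between 0 and 1.
    """
    if side == "no":
        shares = stake / (1.0 - price_yes)  # each "no" share costs (1 - price_yes)
        return shares - stake               # every "no" share pays out 1 mana
    return -stake                           # "yes" shares are worth nothing here
```

Nothing in this function depends on what the bettor actually believes about extinction. In the surviving world, "no" always comes out ahead of "yes", which is why the price carries so little information.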

Markets that resolve before extinction can potentially get people to bet accurately, but then it's hard to have reasonable resolution criteria that make the market accurately reflect reality.

You could also do what Eliezer did with his 2030 market, where it resolves N/A at some future point in time, which encourages people to bet their beliefs in order to be able to invest their profits in the meantime.

@harfe I didn't realize Manifold adds its own "other" category. Any new options added will go into (Manifold's) "other". Please ignore "will cause extinction, other".