The close date will be extended until an AI model achieves a performance equal to or greater than 80%, on either FrontierMath by EpochAI (https://epoch.ai/frontiermath), or Humanity's Last Exam by safe.ai (https://lastexam.ai/).

Resolution source for the Last Exam:

This resolution will use https://scale.com/leaderboard/humanitys_last_exam as its source, if it remains up to date at the end of 2025. Otherwise, I will use my discretion in determining whether a result should be considered valid. Obvious cheating would not be considered valid.

Resolution source for FrontierMath:

EpochAI statements/information on their website.

See also:

/Manifold/what-will-be-the-best-performance-o-nzPCsqZgPc

/Bayesian/what-will-be-the-best-ai-performanc

/Bayesian/will-o3s-score-on-the-last-exam-be

/MatthewBarnett/will-an-ai-achieve-85-performance-o--cash

/Bayesian/will-an-ai-achieve-85-performance-o-hyPtIE98qZ

They are creating a new version of the dataset now, in response to the criticisms

@RyanGreenblatt Why do you think this? Can you give any specifics about what kind of impossible we're looking at?

@RyanGreenblatt that sounds like a plausible estimate to me but imo corrections should be allowed to the dataset for the purposes of this market, but not ~additions that substantially change it. At the very least for FromtierMath you have to account for the addition of some future problems some they are still planning on adding some to the completed dataset iirc.

@RyanGreenblatt any reason to think so? These datasets are not old-style CIFAR with mislabels and careless samples

@mathvc They are expert-level questions selected for being failed by current llms. If by hypothetical the llms are expert level already, it’ll filter out all correct question-answer pair and keep the mislabeled questions, i think is the idea? If they weren’t sufficiently verified a high error rate makes sense

@Bayesian They just introduced a Bug Bounty program, which might affect your prediction.

https://scale.com/blog/humanitys-last-exam-results

I've heard from people who have spot checked the publicly released problems that a very large fraction are incorrect or impossible.

@Bayesian Assuming the resolution source you provided accepts corrections to problems, will you count it

@copiumarc it’s a long story but it’s related to the switch to market orders now converting to limit orders, they’re using an aggressive Acc to implicitly provide liquidity (it’s a bad system, and many more details on the discord)

@Ziddletwix how do i report something to manifolf

i just tested and it fills your limit orders too, this is borderline mana exploit

@copiumarc so basically any sort of reporting is best done on the discord, whether it's reporting a bug or giving feedback on a feature or anything, that's where staff would see it.

that being said, not sure there'd be much to report for acc filling too many limit orders, i think that's kinda the explicit plan atm, and it's a mess (i do not think that is a good way to provide liquidity on the site)

OpenAI's new Deep Research model got 26.6% on HLE. However, it was allowed to browse the internet. I'm not sure if that's supposed to count according to the benchmark leaderboard. regardless, i'll stick with what the benchmark leaderboard counts.

https://scale.com/leaderboard/humanitys_last_exam

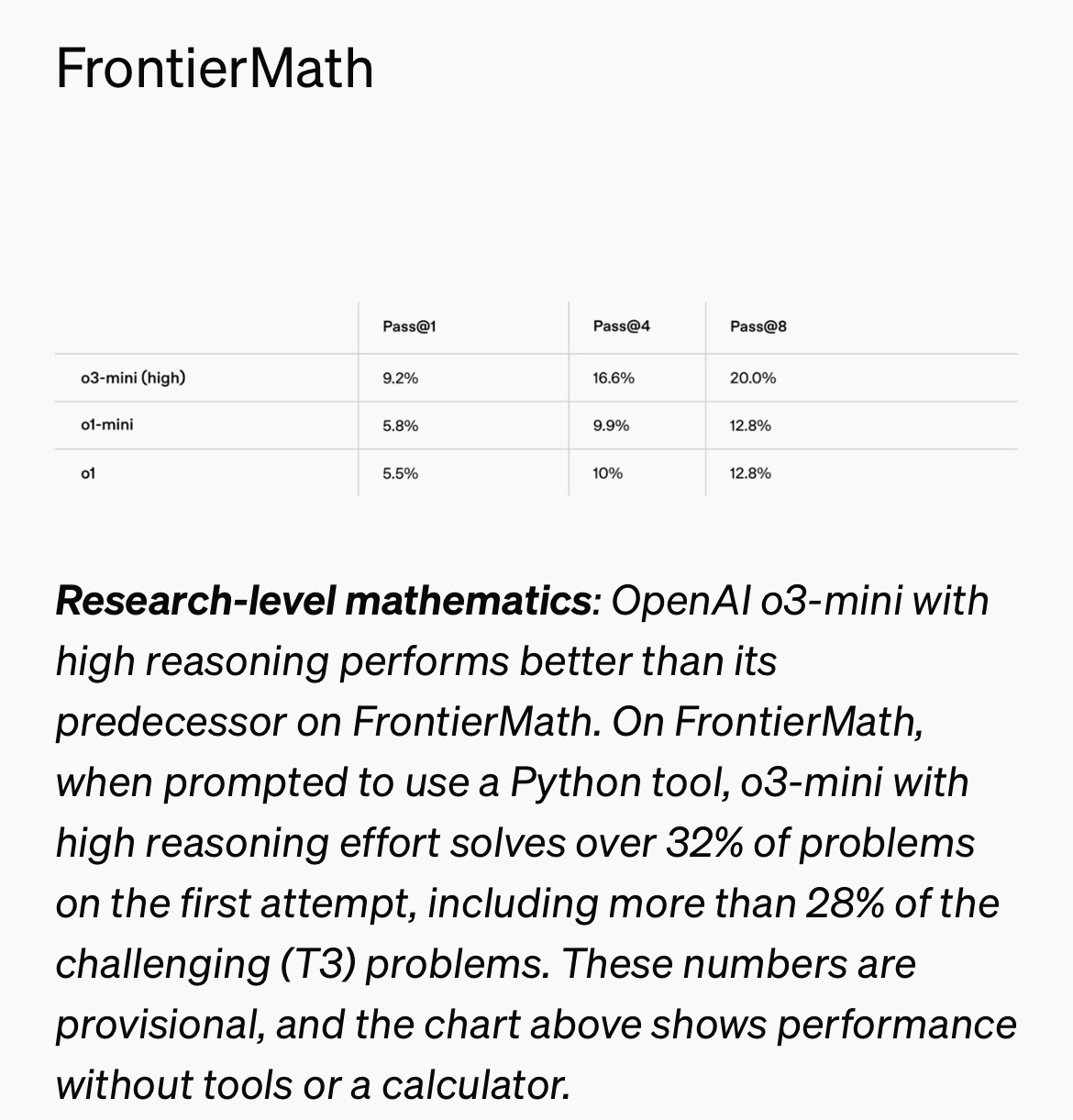

o3-mini scored ~30% on FrontierMath and 12% on HLE.

This is misleading because the FrontierMath has a very easy part (~20% undergrad level) as well as very difficult part (~20% frontier research level questions).

I expect AI models to have extremely hard time getting above 60-70% on FrontierMath, but i will be happy to be proven wrong

@mathvc the problems in the FrontierMath benchmark are split into three difficulty categories: T1, T2, and T3. With T3 being the hardest. IIRC 25% of problems are T1, 50% are T2, and 25% are T3.

28% figure is from here: https://openai.com/index/openai-o3-mini/

@jim to be honest I don’t know where openAI got T1, T2, T3. Because paper about this benchmark talks about 5 levels of difficulty and never uses the notation T3.

What is also interesting is we have no clue which model got 32%. o3-mini-high scores way below that (9%)

@mathvc yeah i remember reading that when the first markets came up on the benchmark. But since then the people who made the benchmark have talked about the tiers on twitter, so they're real. Also, OpenAI funded the benchmark and worked with Epoch to create it, so they should know what they're talking about

@Bayesian I would agree with that actually. Think both are set high enough that significant events within the Trump term and AI breaking into the public sphere both come first, which make this very hard to have high confidence on.

next up, inverse copium arc strategy