This market is taken from a comment by @L. I assume by "software side scaling" they mean something like "discontinuous progress in algorithmic efficiency", and that is what I will use to resolve this market.

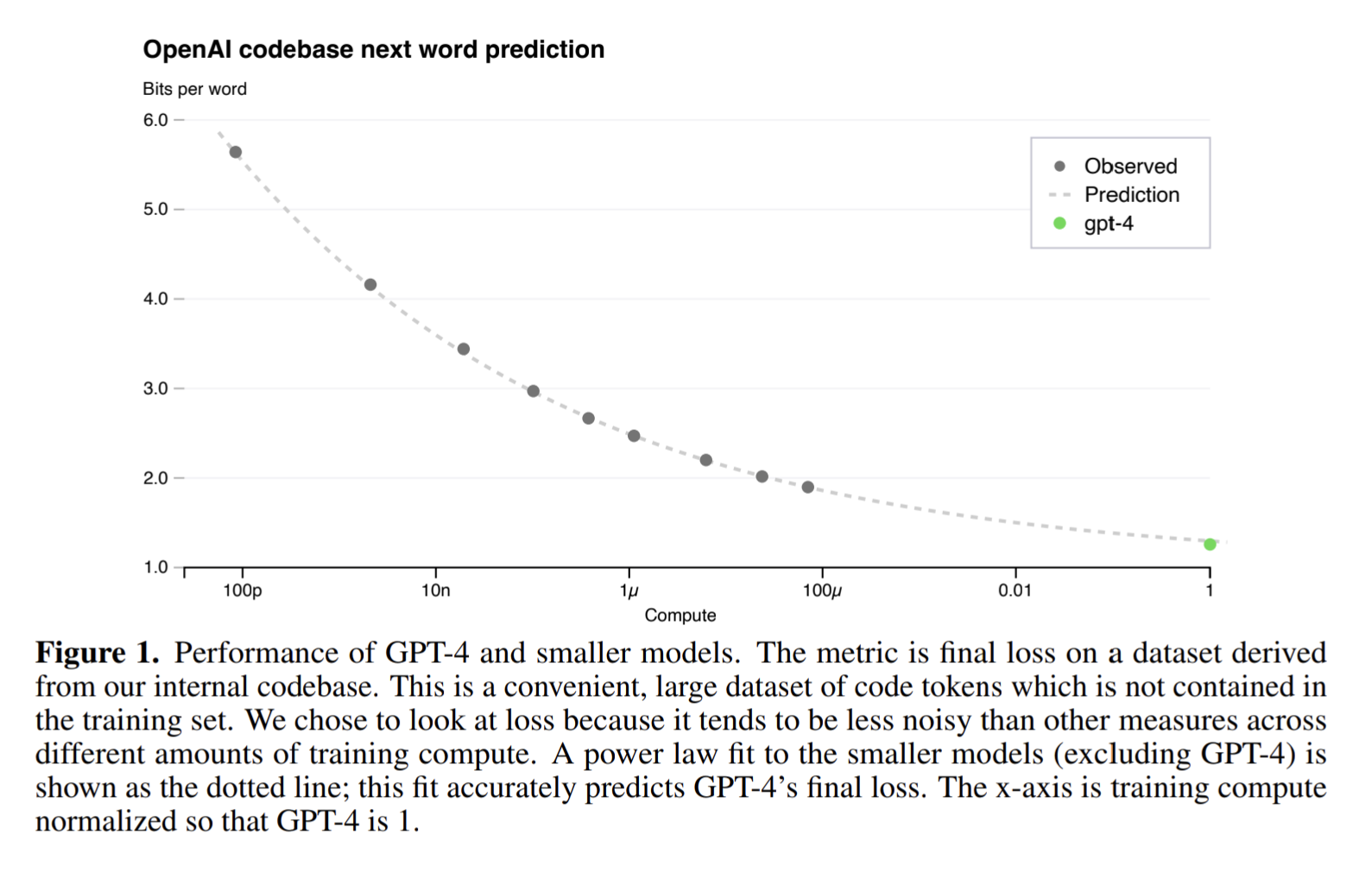

See this paper for an explanation of algorithmic efficiency: https://arxiv.org/abs/2005.04305. tldr: the efficiency of an algorithm is the number of FLOPs using that algorithm required to achieve a given target.

Current estimates are that algorithmic efficiency doubles every ~2 years. This market resolves YES if there is a 10x algorithmic efficiency gain within any six month period before 2025, for a SOTA model in a major area of ML research (RL, sequence generation, translation, etc)

It must be a SOTA model on a reasonably broad benchmark - meaning it takes 1/10 the FLOPS to achieve SOTA performance at the end of the six month period, and it can't be, for instance, performance on a single Atari environment or even a single small language benchmark.

In short: it needs to be such a significant jump that no one can reasonably argue that there wasn't a massive jump, and it needs to be on something people actually care about.

I am using "algorithmic efficiency" rather than "increase in SOTA" because it's harder to define "discontinuity" across final performance. "2x increase" is perfectly reasonable in RL but nonsensical for a classification task where SOTA is already 90%.

I was already thinking that o1 probably resolves this YES, but I also think DeepSeek-R1 resolves this. R1 was only published in 2025, but it existed in 2024. I'm inclined to resolve this market YES as a result, but given how handwavy a lot of price estimates actually are I don't think it's super clear cut. Does anyone have arguments for/against?

This has happened with o1? This is a new algorithm that reaches levels of performance that would have required orders of magnitude more compute with previous methods. This constitutes an algorithmic discontinuity before 2025 as the inference-time overhang opens up.

@VincentLuczkow this has happened

https://x.com/gdb/status/1870176891828875658

This question resolves YES

@MalachiteEagle I'm afraid I don't think they do. Please read the market carefully: it specifies a reduction in cost for the same performance as the resolution criteria. We have very little idea how much o1 cost to train, I would be kind of surprised if it was cheaper at all, and we certainly don't know if o1 trained on 1/10 of, say, claude3.5's budget would let it achieve the same results.

I do think that o1 and o3 arguably meet @L's original prediction, which didn't specify a cost criteria. If I had a separate market that resolved based on my personal vibes of whether there was a discontinuity I would resolve that yes, but this market has much more specific criteria.

@vluzko it seems pretty clear that there is a significant reduction in cost relative to the cost it would have required to train a gpt-style model to reach the same level of performance as the o1/o3 models. But yes, fair enough we don't yet have concrete numbers for the compute used to train these, and we don't have an apples-to-apples comparison reaching the same level of performance as older models.

All that being said I think it's pretty evident the essence of this question has taken place. That there has been a software-side innovation leading to a sudden discontinuous jump in performance at the same compute cost. We just don't have access to the internal experiment results that OpenAI has.

@MalachiteEagle Yeah good point I do think it's pretty plausible that a trad model trained to o1's performance would be 10x the cost. I think that means this market needs to stay unresolved until we actually have such a model.

And yeah agreed the essence of the question has taken place. Which I was not expecting to happen, I made this market because I thought @L was totally wrong.

It seems plausible to me that OAI/Anthropic have amassed far more high quality data (transcripts, contractor, textbooks etc.) than they had previously and that by training on such data in addition to other algorithmic improvement they'd get a 10x efficiency gain.

I wouldn't consider this an 'algorithmic' gain, but would like @vluzko to confirm this.

Another edge case is what if GPT-5 comes out, and then within 6 months a distilled model comes out e.g. GPT-5-Turbo which cost 10x less compute to train and achieves equivalent performance? Presumably this should not count since the previous GPT-5's compute was necessary.

I find this question in principle very interesting, but these edge cases plus the absence of public information means that the resolution of this question seems noise dominated.

I will not count a gain purely from better data, but I would accept models that don't train on the exact same dataset (so there's room for improvements from data to sneak into the resolution) that are more than 10x better (e.g. if Model A has a curated dataset and is 20x more efficient than Model B, I will probably count that because I don't expect data alone to go from 10x to 20x). This is ambiguous and subjective, of course - if people think I should resolve that scenario N/A instead I'm okay doing that.

The initial cost of a distilled model will be counted.

Does Alpaca's new fine-tuning based approach count? https://crfm.stanford.edu/2023/03/13/alpaca.html

@jonsimon This isn't SOTA and obviously the algorithmic cost of a model includes the cost of any pre training.

@vluzko Ok so that's a "no" then, since the teacher model still needs to be expensively pretrained. Thanks!

What sorts of speedups count here? Would an 10x inference time speedup from something like CALM resolve to yes?

What about a new model architecture that achieves a 10x speedup, but is only useful for a single special-purpose task, like image-segmentation?

What about a massive model where a ton of priors are backed into it upfront so that it's very fast to train, but is so slow at inference time that it's impractical to use for anything in the real world?

@jonsimon I feel that the description already answers these questions.

Inference time speed up: no, algorithmic efficiency is a measure of training time, this is in the abstract of the linked paper.

Single special purpose task: no, the description states a reasonably broad benchmark in a major area of research.

Slow at inference: this is fine, inference time is not included in the measure. All that matters is reducing training time FLOPs.

@vluzko Thank you for clarifying. In that case this market seems way too biased towards yes. Why expect a smooth (exponential) trend that's held for a decade to suddenly go discontinuous?

@jonsimon Do you mean biased towards no? It resolves yes if the trend breaks.

To the more general point: a market about an extreme outcome is biased in some sense but not in a way that matters to me. My goal in asking this question isn't to be "fair" to both yes and no outcomes - it's to get information about this particular outcome. Modifying the criteria to make it more likely to resolve yes would make the market worse at that. I do generally try to have many questions covering different levels of extreme outcome, and I will add more algorithmic efficiency questions in the future, but this question is in fact asking the thing I want it to ask.

@vluzko This wasn't a criticism about how you constructed your market, it was a comment in how other market participants have behaved thus far.

If I'm understanding the linked paper correctly, algorithmic efficiency has increased at a steady exponential rate for a decade. So the sort of discontinuity this market is asking about is basically implying that the trend goes dramatically super-exponential, at least briefly. That seems... really unlikely a priori? Like, why would anyone expect that to happen specifically within the next two years when it hasn't happened in the prior 10?

in other words: I don't think I want to speed up the possibility I see by talking about it. It's obvious enough if you're the type to think of such things, and the real question in my head is whether anyone who knows what they're doing will figure out how to get a combination of ideas working that makes it actually run. the thing I'd really like to express here is, y'all, please be aware that things are likely to scale suddenly.

Also, for what it's worth, the effect I'm expecting would move somewhat sigmoidally, and would be a multiplicative factor on algorithm capabilities. I don't expect it to max out at "magically perfect intelligent system" the way the trad miri viewpoint does; I don't think this is a recursive self improvement magic button or anything like that, if anything I think even after this improvement AI will still not be smart enough to reliably come up with new ideas on the level needed to make this kind of improvement in the first place.

But yeah, I expect something specific in how training works to change in ways that drastically speed up learning.

@L this isn't something as simple as "only do training updates on data that the model is uncertain about" or something silly like that, right? If it's something more technically substantial then yeah, I'm with @vluzko , write it down and hash it, with the hash to be decrypted if/when said breakthrough happens.