I will try to find estimates of how many people are doing this in order to resolve it, but no guarantees.

I think it's >70% likely that 1 in 1,000 Americans will be using A.I.s for therapeutic purposes. But I'm not that confident it will clearly be the case, because many A.I. providers will deny that they provide therapy services to avoid liability risk. Then again, taking on that liability might come with access to insurance money, so maybe they'll think it's worth it. I wonder if human therapists will try to ban the competition.

@HankyUSA If there's one thing you can rely on, it's a regulated industry demanding stricter regulations to shut out competition and keep prices high.

It's amusing to me that we have the tech to deploy this today, but the liability issues make it a nonstarter. I'm not sure what might change that situation: getting AI to give disastrous advice infrequently enough to turn a net profit after subtracting litigation costs still seems far off, particularly when you consider that there are going to be grifters who craft adversarial prompts specifically so they can sue you.

I doubt the LLM architecture is going to solve this problem with any amount of tweaking, though.

There are many things to consider with this post. The first is: will there be registered AI therapists or life coaches? Yes, AI has a strong ability to comprehend language, and with this it can offer advice; however, we cannot guarantee it is good advice. While AI is being programmed to understand the nuances of mental health and to recognize distress signals in speech, it cannot fully support a person in a way that is specific to their mental health situation.

There have also been multiple instances of people being encouraged by AI to commit crimes against others and against themselves.

That being said, might people speak to AI for help? Sure. But will it be medically approved, full-on therapy by 2028? I would vote no.

https://www.betterup.com/blog/ai-therapy

https://www.euronews.com/next/2023/03/31/man-ends-his-life-after-an-ai-chatbot-encouraged-him-to-sacrifice-himself-to-stop-climate-

https://care.org.uk/news/2023/07/ai-bot-plays-role-in-encouraging-man-to-attempt-murder-of-the-late-queen

I think a lot of people are talking with ChatGPT, and many talk with it a lot. I do. Once you get into it, even if you use it strictly for work, I think at some point you realize it can do a lot of other things, and if you need therapy, it's only natural to try talking with a chatbot because it is free and super simple.

And a lot of people kinda want or need therapy (something like 10%?). So I think it is very likely to happen if things keep going the way they are now.

But it's possible that therapy or coaching chatbots won't be popular, and instead we will have ultra-general chatbots that can act however you want. In that case, it may be very hard to estimate the numbers.

Also, of course, we have x-risk and other serious catastrophic scenarios. I think they are not very likely, but they are still worth considering because 5 years is a lot of time. Being somewhat conservative, I'd put them at 5%+ probability.

@ValeryCherepanov Yes, most of the probability I allocate to this 0.1% claim failing to come true is related to legislation, war, etc. With normal development it seems like a lock. I just wish it could be resolved instantaneously.

I expect this would count: https://marginalrevolution.com/marginalrevolution/2023/03/how-will-ai-transform-childhood.html

@Conflux No, I think he is saying he will take it as evidence if like 5 percent of people he knows or reads are talking about it. Since there aren’t reliable numbers published.

@Conflux Though I expect that will change before 2028. These companies are going to be a lot more transparent if they hope to avoid crippling regulation.

@Conflux I think Scott's estimate here is so blatantly wrong there is a chance it is a mistake and his actual prediction is much higher than 5%.

@Conflux Maybe he meant 50%? He did put 33% on talking weekly to a romantic companion, which I think is less likely.

> No, I think he is saying he will take it as evidence if like 5 percent of people he knows or reads are talking about it.

If he meant this, he definitely could have worded it in a less confusing way.

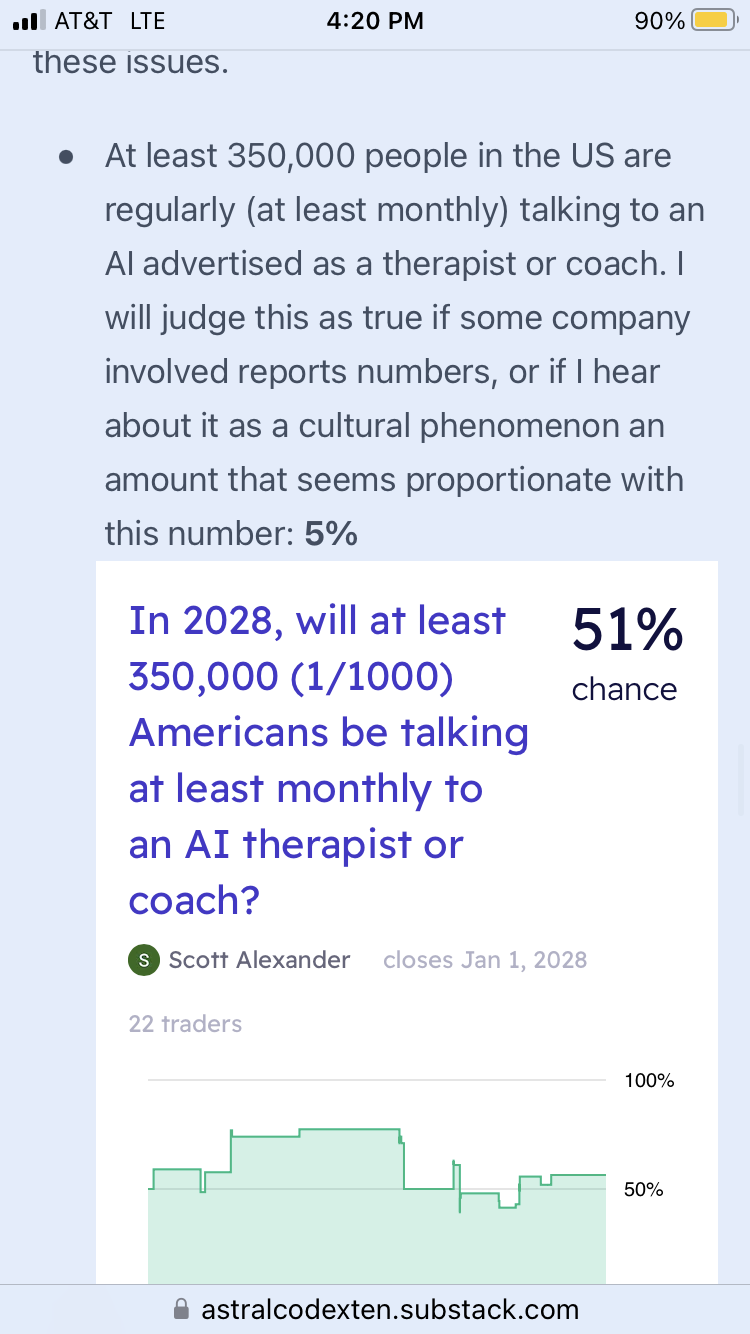

@bingeworthy He definitely didn't mean it as the base rate. All of the other questions also have a bolded percentage at the end that is a prediction for that question.

@Gabrielle Actually, "this number" is 350,000 and 5% is the base rate. I had to read all of them to pick up on it. This is way too low. I mean, if Weight Watchers launches a chatbot, which seems likely the second you think of it, then this is an immediate YES. Don't think of this question as being about something growing organically to 350,000; there are plenty of such "therapists or coaches" who could increase their productivity by having a chatbot supplement their personal care.

@BTE I suspect that Alexander is imagining an FDA approved therapist bot, or something like that, which would require regulatory approval. That would explain the relatively low rate. The fact that it just says "therapist or coach" complicates it, so I definitely agree that it could just be a mistake.

@Gabrielle I would believe 5% as a prediction for "FDA approval". Which is very much not what the post says.

@Gabrielle This is what I do for a living, actually. Even chatbots like Babylon Health have no regulatory oversight.

@BTE Good to know! In that case, I think either Alexander doesn’t know that or it was a typo, so either way 5% is wrong.