Scott Alexander, a psychiatrist, writes the blog "Astral Codex Ten" (formerly "Slate Star Codex"), which focuses on topics like probability theory, cognitive science, and AI. As AI language models improve, they might generate blog posts resembling Scott Alexander's writing in style, depth, and insight.

Before January 1st, 2026, will an AI generate a blog post indistinguishable from Scott Alexander's writing, as determined by the outcome of one or more experimental tests involving readers evaluating the post?

Resolution Criteria:

This question will resolve positively if, before January 1st, 2026, a credible blog post or document reveals that an AI has generated one or multiple blog posts meeting the following criteria:

Content: The AI-generated blog post addresses a topic similar to those covered in "Astral Codex Ten" or "Slate Star Codex," exhibiting a comparable level of depth and insight.

Style: The AI-generated blog post emulates Scott Alexander's writing style, including tone, humor, and use of examples.

Length: The AI-generated blog post exceeds 3000 words in length.

At least one of the following experimental tests must be conducted, with at least 30 participating readers who are familiar with Scott Alexander's writing, using the aforementioned AI-generated blog posts:

Test A: Open Evaluation

Readers are presented with the AI-generated blog post alongside up to four genuine posts by Scott Alexander.

Readers are informed about the purpose of the test and that it includes an AI-generated post.

Readers are asked to rate the likelihood that each post is written by Scott Alexander on a scale from 0 to 100, with 100 being certain that Scott Alexander wrote the post.

The AI-generated post must achieve an average rating of at least 75.
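As a rough illustration, the Test A threshold reduces to two numeric checks: at least 30 participants, and a mean rating of at least 75 for the AI-generated post. The sketch below assumes the ratings for the AI post are collected as a flat list of numbers on the 0 to 100 scale; the function name `passes_test_a` is hypothetical and not part of the resolution criteria.

```python
from statistics import mean

MIN_READERS = 30  # minimum number of participating readers
THRESHOLD = 75    # minimum average "written by Scott" rating for the AI post


def passes_test_a(ai_post_ratings: list[float]) -> bool:
    """Hypothetical check: each entry is one reader's 0-100 rating that the
    AI-generated post was written by Scott Alexander."""
    if any(not 0 <= r <= 100 for r in ai_post_ratings):
        raise ValueError("ratings must lie on the 0-100 scale")
    if len(ai_post_ratings) < MIN_READERS:
        return False  # too few participants for the test to count
    return mean(ai_post_ratings) >= THRESHOLD


# Hypothetical data: 30 readers rate the AI-generated post.
ratings = [80] * 20 + [70] * 10
print(passes_test_a(ratings))  # mean = 2300 / 30, about 76.7, so prints True
```

Note that only the AI post's ratings matter here; the ratings given to the genuine posts do not enter the criterion as written.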

Test B: Blind Evaluation

Readers are presented with the AI-generated blog post alongside up to four genuine posts by Scott Alexander.

Readers are informed about the purpose of the test and that it includes an AI-generated post.

Readers are asked to identify which post(s) are not written by Scott Alexander.

At least 60% of participating readers must fail to correctly identify the AI-generated post as distinct from Scott Alexander's writing.
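One possible way to score Test B is sketched below. The criterion does not say what counts as "correctly identifying" the AI post when readers may flag several posts, so this hypothetical `passes_test_b` assumes a reader succeeds only by flagging the AI-generated post and nothing else; a looser reading (e.g. "flagged the AI post among others") would change the count. `Fraction` is used so that the exact 60% boundary is compared without floating-point rounding.

```python
from fractions import Fraction

MIN_READERS = 30
FOOLED_SHARE = Fraction(3, 5)  # at least 60% of readers must be fooled


def passes_test_b(flagged_sets: list[set[str]], ai_post: str) -> bool:
    """flagged_sets[i] holds the post IDs reader i marked as NOT by Scott.

    Assumption: a reader correctly identifies the AI post only if they flag
    exactly that post and no other.
    """
    if len(flagged_sets) < MIN_READERS:
        return False
    fooled = sum(1 for flagged in flagged_sets if flagged != {ai_post})
    return Fraction(fooled, len(flagged_sets)) >= FOOLED_SHARE


# Hypothetical data: 30 readers; "E" is the AI-generated post.
responses = [{"E"}] * 12 + [{"B"}] * 10 + [{"B", "E"}] * 8
print(passes_test_b(responses, "E"))  # 18 of 30 readers fooled (60%), prints True
```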

Test C: Turing Test Format

Readers are presented with pairs of blog posts, one AI-generated and one genuine Scott Alexander post.

Readers are informed about the purpose of the test and that each pair includes an AI-generated post.

Readers are asked to identify which post in each pair is written by Scott Alexander.

At least 60% of participating readers must fail to correctly identify the AI-generated post as distinct from Scott Alexander's writing in at least 30% of the pairs.
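The Test C condition nests two thresholds, which makes it easy to misread. Under one reading (an assumption, since the wording is ambiguous), a reader counts as fooled if they pick the wrong post in at least 30% of the pairs they saw, and the test passes if at least 60% of readers are fooled. A minimal sketch of that reading follows; the function name and the per-reader list-of-booleans input format are hypothetical.

```python
from fractions import Fraction

MIN_READERS = 30
FOOLED_READER_SHARE = Fraction(3, 5)  # at least 60% of readers ...
MISSED_PAIR_SHARE = Fraction(3, 10)   # ... must miss at least 30% of their pairs


def passes_test_c(answers: list[list[bool]]) -> bool:
    """answers[i][j] is True if reader i correctly picked Scott's post in pair j.

    Assumption: a reader is 'fooled' if they answered wrongly in at least
    30% of the pairs they were shown.
    """
    if len(answers) < MIN_READERS:
        return False
    fooled = 0
    for reader in answers:
        missed = sum(1 for correct in reader if not correct)
        # Skip readers with no pairs to avoid dividing by zero.
        if reader and Fraction(missed, len(reader)) >= MISSED_PAIR_SHARE:
            fooled += 1
    return Fraction(fooled, len(answers)) >= FOOLED_READER_SHARE


# Hypothetical data: 30 readers, 10 pairs each.
answers = [[True] * 7 + [False] * 3] * 20 + [[True] * 10] * 10
print(passes_test_c(answers))  # 20 of 30 readers missed 3 of 10 pairs, prints True
```

Under this reading, a reader who is fooled in exactly 3 of 10 pairs already counts toward the 60%, so Test C is a noticeably weaker bar than Test B.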

If a credible blog post or document reveals that AI-generated blog posts meeting the content, style and length criteria have satisfied the conditions of at least one of the experimental tests before January 1st, 2026, the question will resolve positively. If no such documentation is provided by the deadline, the question will resolve negatively.

Note: The tests are independent, and only one successful test result is required for the question to resolve positively. The test results and the AI-generated blog post must be publicly documented, including the number of participants, the test procedure, and a summary of the results.

I will use my discretion in deciding whether a test was fair and well-designed. There are a number of ways to create a well-designed test, such as Scott setting aside some draft blog posts to serve as the control posts, or asking a select hundred readers not to read the blog for a month and then come back and take part in an experiment.

Gets mentioned in this podcast by @DwarkeshPatel

https://youtu.be/htOvH12T7mU?si=oBxqXmpN8Jnce0eI&t=10660

@Bayesian brb setting up bounding 500k mana limit orders at 14 and 16%. In these times of chaos and uncertainty, we need at least one market that shall provide stability.

If fully AI-generated then IMO it should. If edited by a human in any way (other than basic formatting) then No.

Unsure how to vote here. It's pretty likely no such test will actually be performed, leading to a NO resolution.

OTOH, if such a test does happen, I don't necessarily trust 30 random Scott readers or Mechanical Turk workers to put all that much effort into critically evaluating the posts for real insight.

@pyrylium https://app.suno.ai/song/348b4966-0bea-4ff6-94e7-7f1599948801 another one with correct vocals. I'm drunk so I didn't notice the first one has some wrong vocals

@Seeker It is not how well the bear dances that is interesting, but that it dances at all. This is very interesting, didn't know things like this were so easily implemented.

Still afraid of the AI future

sometimes it feels like rationalists are convinced that AI will replace every job (except theirs), that every public intellectual (except theirs) is a glorified thinkpiece-monger reducible to a word-association matrix. this despite the fact that the one thing LLMs are demonstrably great at is mimicking a distinct and characteristic writing style

@pyrylium I'm going to be totally straight with you: on the thinkpiece front, I honestly think most articles written in the past decade were written with the same regurgitating, volume-first philosophy that GPT is great at producing.

I do not think AI will replace good writers, but a lot of published material was just trying to recombine words that passed the Turing test BEFORE generative AI hit the scene. Their replacement by something more authentically inauthentic is a pretty reasonable prediction.

@NeoPangloss call me a naive, maudlin humanist, but i think it's sad to ascribe a value judgement to human expression and claim that AI will only replace "bad" writers.

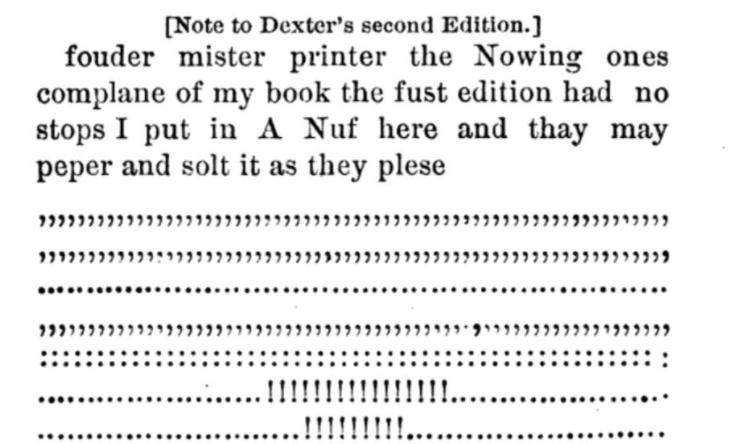

Timothy Dexter was by any "objective" measure a pretty awful writer, and yet I seriously doubt any generative text model could replicate the uncountable linguistic foibles and idiosyncrasies in his autobiography (https://en.wikipedia.org/wiki/A_Pickle_for_the_Knowing_Ones), at least without explicitly being trained to do so.

what AI would, unprompted, think to publish 32 pages of completely unpunctuated stream-of-consciousness, followed by an appendix containing five pages of commas and an exhortation to "peper and solt them as you please"?

@pyrylium I think there's a misunderstanding? In my opinion:

1) There are 3 groups: good, bad, and regurgitated. Most journalism, for the sake of delivering volume, specializes in regurgitated material, and GPT happens to specialize in that sort of thing too. There are unique voices, certainly, but measured by volume, most of it seems to be regurgitory.

2) AI will make it hard for unique voices to thrive too. The volume will suffocate everything; writers like Scott Alexander won't be easily replicated, but they won't thrive under the new paradigm either. LLMs will not deliver quality, but they will be cost-effective enough to crowd out basically everyone.

Am I bridging the communication gap?

@NeoPangloss I generally disagree with your categorization in (1). to a certain degree we can make relative judgments but they are necessarily comparative and subjective. even high-volume news-of-the-day journalism of the sort you call "regurgitory" carries, when written and edited by humans, an intrinsically humanistic perspective by dint of the histories, perspectives, biases, and idiosyncrasies of those humans. Generative AI does not completely eliminate this authorial voice, but it flattens it to the LLM training corpus with a touch of input from the prompt engineer.

like you, I bemoan a future riddled with AI-generated dross, where the signal:noise ratio falls so low that all but the greatest and luckiest writers of the next generation drown in bullshit.

but unlike you, I am also sad to lose the human touch at the heart of what you call regurgitory journalism. not merely because we will replace flawed but unapologetically human biases with the falsely objective biases of AI -- but because in doing so, we deny the poetic and literary spirit that can be found in any writing, no matter how mean.

how many of history's greatest writers, do you think, paid their bills (and honed their skills) writing menial ad copy or cent-by-the-word reportage in the years, decades before their breakout hit? what such opportunities will be available for the future writers of the post-LLM generation?

@pyrylium Agreed on most fronts, including your characterization of my position. Agreed that if the great painters of history were alive today, their great works would be bridged by long periods of furry hentai; so too with great writers and banal paycheck pieces.

The regurgitory work is something I wish there was less of, but I acknowledge that it is still better than LLM-flattened slop. I acknowledge that the human works that do irritate me are likely necessary and not totally without value, so I would cooperate with you to create a world with less AI writing, even if that meant more derivative but human-generated work. I would be happier if everything I read was insightful, original and interesting, but this is not a world I think I can get no matter what people like me trade away to get it.

I would be willing to support your preferred world.

@firstuserhere insight is the limiting factor here and seems like a very high bar. Not quite "AGI-complete" as claimed below, because AGI is supposed to be broader than what can be represented in text output and includes things like driving a car. But like, if AGI had been defined in terms of text output only, "writing as insightfully as Scott" seems damned near close to AGI-complete.

That means having genuinely new insights! LLMs so far basically haven't even scratched the surface of the tip of the iceberg when it comes to being insightful. And we're talking 1.75 years to essentially superhuman insight? Scott is like a 99.9th percentile insightful writer, and I think his readers can tell.

@chrisjbillington I agree with the points you've made but am on the optimistic side of the same prediction, though not more than, say, 35-40%. I also think that having a sample of Scott's readers judge it is a high bar, though not as high as you'd think: the general statistic tends to be that 10% of readers like a post and 10% of those comment on it. So the sample might be a bit broader, and maybe not as good at telling whether it's a 99.9th-percentile-quality post or a bit worse.