Will an organization or government fund a project that constructs an AI containment zone where we can safely create AGI and possibly superintelligence? Some theorize that we could use AGI to independently generate candidate alignment procedures, which we could then verify as safe using a combination of top scientists and the other AGIs in containment.

https://chriscanal.substack.com/p/the-omega-protocol-another-manhattan

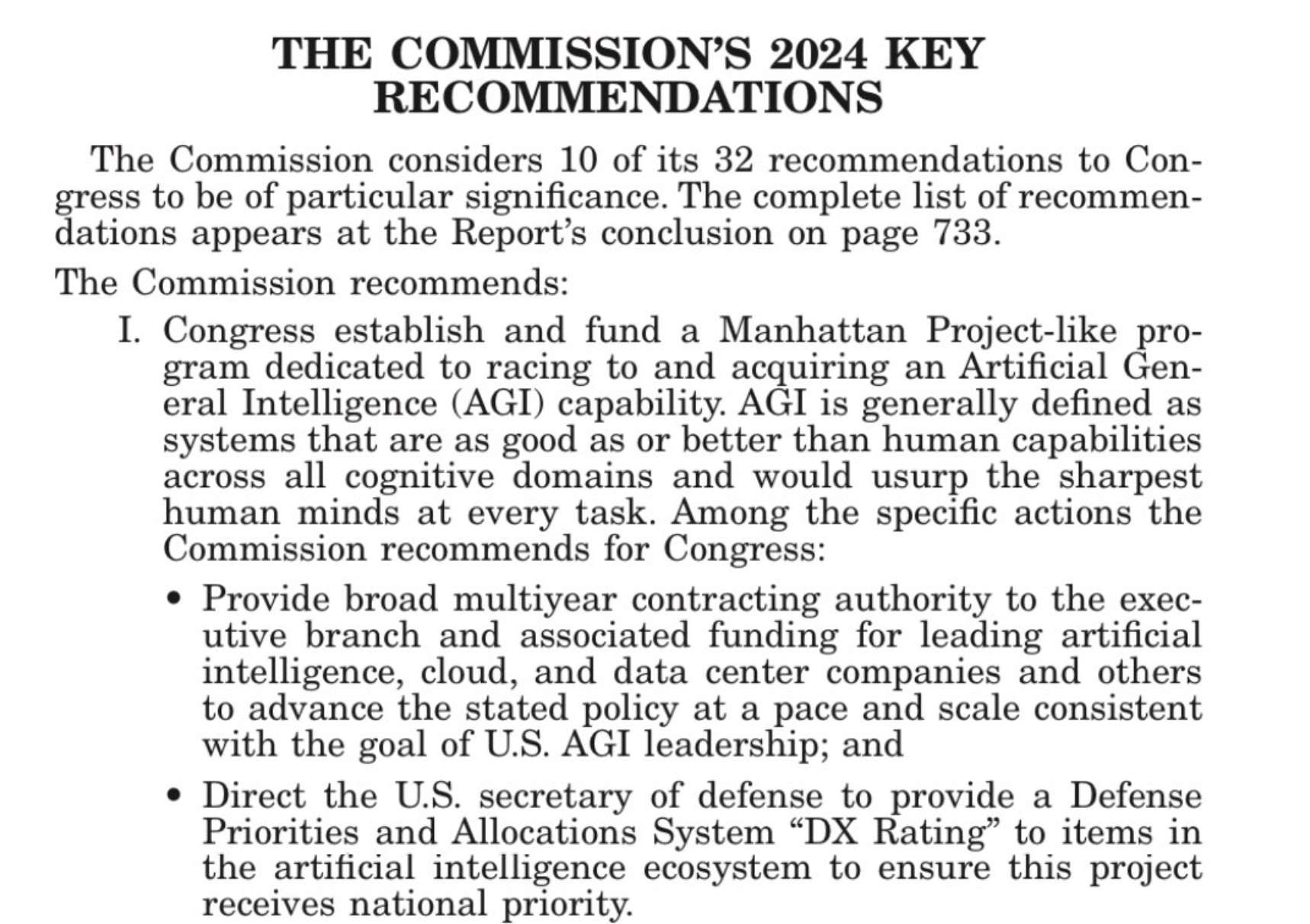

Interesting update. The US government may be trying to fund an AGI Manhattan Project, but I don't know if it will get pushed through before the end of the year. My prediction timing may have been a little off :( https://x.com/deanwball/status/1858893954982232549

@mariopasquato Is it? His planning extends beyond 2029, so that's at least 60m pounds (75m USD).

I agree it's less than needed, but more robust criteria should definitely be proposed.

@IsaacKing Sorry for not making the resolution criteria clearer. How about we say that, at minimum, the project should cost 1/100th of the Apollo program to be considered large? Apollo cost $257 billion adjusted for inflation, so I think anything less than $2.57 billion is tiny. Thoughts?

@Joshua It's not clear if "fund" means "fully fund", and if it does, what "fully fund" would actually mean. These projects are often started gradually, with review/analysis procedures and the like early on, then scaled up.

Unsolicited advice: memes are funny (I laughed), but when asking for billions in funding for a project that has literally no guarantee of working out (the Manhattan Project, by comparison, had a relatively well-understood path to success despite major hurdles), I would try to keep the communication more professional. I would not put memes in a grant proposal even for a small physics project for which I am asking well under $1M. Sorry if this comes across as unconstructive; that's not my intention.

@mariopasquato This is a good criticism, but the article isn't really a grant proposal; it is an invitation to think about the idea and improve it.

I think the memes are OK for that; they draw attention and help people relax.

What I don't like much is the reference to Musk, because I think the man is a con man, and people are greatly overestimating his competence. (Also, it doesn't seem to be just me who dislikes him: https://manifold.markets/BokiLee/elon-must-manifold-favorability-pol)

@dionisos Sure, at this stage anything goes. But eventually there is a PR risk that opponents of the project could exploit. Ditto for name-dropping Musk.

@mariopasquato The article is also terrible for other reasons, such as its reliance on meaningless signaling markets for its claim that the risk is 11%. I sincerely hope no one is taking that seriously.

@IsaacKing IMHO it would be more convincing to discuss the probability of intermediate steps (solving the Winogrande challenge, passing a full adversarial Turing test…) and then talk up existential risk from there. But this is just my feeling. What matters is the audience's feeling (who is going to fund this?). After all, overestimating a threat is a time-tested tradition in defense spending, no? Like the "missile gap"…

@IsaacKing I think the market should be way under 11% (it is at 9% now, so it is a little better). But why is it a meaningless signaling market?

It seems like people still disagree about the actual probability, and the market reflects that (so from my point of view, the Manifold community seems biased toward too high a probability). Am I missing something about why it is so high?

I think he should at least say that these markets should be taken with a grain of salt, and that prediction markets are still far from working correctly.

@dionisos I wouldn't call it meaningless, but it's a market about something happening in the far future. Also, if the market resolves YES, any earnings are useless. Finally, the platform is dominated by people who are obsessed with AI x-risk.

> but it's a market about something happening in the far future.

It can make it harder to predict, but in this case I think it shouldn't be too hard (or, if it actually is hard, then we can't really say the market is way too high).

It can also disincentivize betting on it, but I think the loan system fixes part of that.

> Also, if the market resolves YES, any earnings are useless.

Yes, but this should push the market's probability down, not up.

@dionisos Your second point is true unless people who bet their real beliefs try to overcorrect for this effect, and unless there are other payoffs (not in mana) to having a high predicted probability. For example, being able to use the market to argue that real money should be granted for a project.

@mariopasquato Fair criticism. I feel like nothing matters if people don't read it. And I don't think anyone is going to give me 4 billion bucks anytime soon, so I'm mostly just hoping someone steals the idea and runs with it.

@IsaacKing I'm open to edits. I'm trying to make a blog that's collaborative and fun to read and work on, and I'm not great at it yet. I think I'm getting better, though. My next piece is going to be a lot better, I promise! Also, sometimes I'm just hoping to do my part to shift the Overton window a little... maybe I should be more rigorous with evidence. In any case, thank you for the feedback! It's a huge win for me that you took the time to read the article. If you want something with better evidence and fewer memes, check out this article I wrote on a similar subject with my friend Connor: https://alltrades.substack.com/p/learning-from-history-preventing