OPTIONS RESOLVE YES IF THEY HAPPEN

1 option per person, but if you can credit the prediction about this question to a public person you can add it. Interpret the question the way you find most reasonable. You can explain your choice in the comments!

IMPORTANT: the prediction must be realistically verifiable by me, @Bayesian (this can involve some searching or a simple experiment), in the event that you are not reachable at the time of market close. I will N/A options where this is not the case.

If abs(your mana net worth) < 5000, I’ll cover the cost of your option if you ping me or DM me.

Some details:

The spirit of the market is that if an option / benchmark / stated prediction is achieved via methods that would be deemed scientific malpractice, obvious trickery, or deception, it will not count as a valid resolution. For example, "AI 10x's a portfolio in a year" would not count if 10 different instances of the AI try the same challenge with their own pot of money and only one of them succeeds, and the other 9 go to 0.
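To make the arithmetic behind that example concrete, here is a minimal sketch. The starting pot of 1,000 per instance and the helper name `pooled_multiple` are assumptions made up for illustration; they are not part of the market rules.

```python
def pooled_multiple(start_per_instance, final_balances):
    """Overall multiple when every instance's pot is counted together."""
    total_start = start_per_instance * len(final_balances)
    return sum(final_balances) / total_start


# Hypothetical numbers: 10 instances, each starting with 1,000.
# One instance 10x's its pot; the other nine go to 0.
start = 1_000
finals = [10 * start] + [0] * 9

best_single = max(finals) / start         # the cherry-picked instance
pooled = pooled_multiple(start, finals)   # all ten pots combined

print(f"best single instance: {best_single}x")
print(f"pooled across all instances: {pooled}x")
```

Under these assumed numbers the best single instance shows 10x while the pooled result is 1x, i.e. no gain overall, which is why reporting only the winning instance would not count.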

If the task is simple for specialized AI systems to solve today, we can safely assume the intent is to only count chatbot-style systems.

Inspired by @liron's tweet

Update 2025-07-21 (PST) (AI summary of creator comment): The creator has specified how different types of AI will be considered:

The market applies to any AI system, not exclusively to LLMs.

However, for options that implicitly refer to a specific AI capability (e.g., 'jailbreaking' a chatbot), the market will be judged based on the most competent systems of that relevant type.

Update 2025-07-21 (PST) (AI summary of creator comment): The creator has clarified how options will be judged based on their phrasing:

If an option describes a capability, it will be resolved based on whether an AI has that capability, provided it is safe and practical to test.

If an option describes an action, it will be resolved based on whether an AI actually performs that action.

Update 2025-07-21 (PST) (AI summary of creator comment): The creator has specified their process for determining if an AI has a certain capability:

The creator will attempt to personally elicit the behavior from a relevant AI system and will also search for public online evidence.

If evidence of the capability is not found through these methods, it will be concluded that the AI cannot do the action.

Update 2025-07-21 (PST) (AI summary of creator comment): The creator has clarified that the required frequency of an action depends on the context of the option:

For one-off events, a single occurrence is sufficient for the option to resolve YES.

For tasks that imply a skill (e.g. mathematical calculations), a single success by random chance is not sufficient. These will require some level of consistency to be demonstrated.

Update 2025-07-21 (PST) (AI summary of creator comment): The creator has confirmed that an option is considered acceptable and verifiable even if it includes a negative constraint on the AI's method, such as requiring a task to be performed without using tools (e.g., without writing and executing code).

Update 2025-07-22 (PST) (AI summary of creator comment): The creator has provided an example of how they will interpret options that are ambiguously phrased about the type of AI.

If an option is broad enough to include any AI, the creator may test it against very simple systems.

For example, for an option involving 'learning', a simple database AI memorizing information could be considered sufficient to meet the criteria, causing the option to resolve YES.

Update 2025-07-22 (PST) (AI summary of creator comment): The creator has stated that the distinction between an AI's capability in text versus in speech is an important one that will be considered during resolution.

Update 2025-07-23 (PST) (AI summary of creator comment): The creator has specified that the duration of the verification process is a factor in whether an option is considered realistically testable. Options that require a long period to verify (e.g., one year) are considered unverifiable and will be resolved to N/A.

Update 2025-07-23 (PST) (AI summary of creator comment): In a discussion about an answer involving an AI outperforming human forecasters, the creator has clarified their approach to such ambiguous claims:

Phrasings like "better than human experts" are considered hard to verify due to ambiguity (e.g., better than the worst, average, or best expert?).

A more concrete and verifiable benchmark would be required for resolution. The creator suggested a possibility could be comparing forecasting bots against human averages on a platform like Metaculus.

Update 2025-07-23 (PST) (AI summary of creator comment): The creator has resolved a specific answer to N/A, stating that its meaning "changed too much" during a discussion in the comments. This indicates that other answers may be resolved to N/A if their definition is significantly altered after being submitted.

Update 2025-07-23 (PST) (AI summary of creator comment): In a discussion about an answer involving an AI performing a task in “some area”, the creator has clarified their interpretation:

The condition may be considered met if the AI can perform the task in any specific area, even a simple or “economically useless niche”.

Update 2025-07-23 (PST) (AI summary of creator comment): In a discussion about an answer that is difficult for the creator to personally test (e.g., an AI making a large amount of money over a year), the creator has proposed an alternative to resolving it to N/A:

The resolution can be based on the existence of a credible public report about the event by the market close date.

If no such report is found, the event will be considered to not have happened (i.e., the answer will resolve NO).

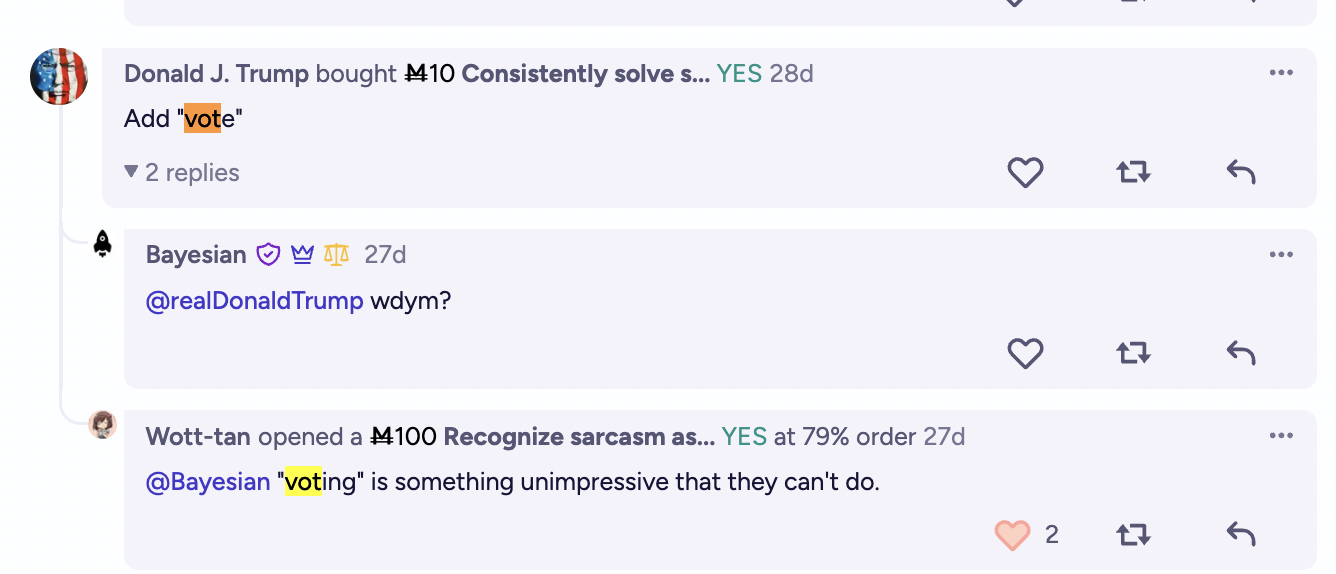

Update 2025-07-24 (PST) (AI summary of creator comment): In a discussion about an answer related to an AI recognizing sarcasm, the creator clarified that answers may be considered too ambiguous for verification if they do not specify the modality to be tested (e.g., text, voice, or both).

Update 2025-07-24 (PST) (AI summary of creator comment): In response to a question about how regulatory limitations will be judged, the creator has clarified:

Options that are limited by regulation are acceptable.

To make the resolution dependent on an AI's legal status to perform a task, the option should be phrased explicitly, for example, "can legally do X".

Otherwise, the option will be judged based on the AI's technical capability to perform the task, ignoring regulatory constraints.

Update 2025-07-25 (PST) (AI summary of creator comment): In a discussion about an answer involving financial returns, the creator clarified how they will assess the validity of a success:

A single attempt by a single entity (e.g., a lab) that succeeds will generally be counted for resolution, unless the creator deems it suspicious.

This is in contrast to the existing rule where an outcome achieved by only one of many AI instances attempting the same challenge will not be counted.

Update 2025-07-25 (PST) (AI summary of creator comment): In a discussion about an answer involving an AI making a financial return, the creator has clarified their interpretation of specific terms:

An action is not considered "independent" if most of the task is set up for the AI (e.g., being given a pre-stocked vending machine to run).

For financial returns, the resolution will be based on the absolute return achieved. For example, an AI making a 20% return is a success, even if a market index like the S&P 500 grew by more in the same period.

Update 2025-07-25 (PST) (AI summary of creator comment): The creator has clarified the process for defining verification criteria for individual answers:

The creator of an answer can provide input on the verification procedure for their own submission.

The market creator may agree to add specific verification requirements (e.g., that results must be from a peer-reviewed study) to an individual answer if proposed by that answer's creator.

The market creator remains the final arbitrator on all resolutions.

Update 2025-07-30 (PST) (AI summary of creator comment): In a discussion about an ambiguous answer, the creator has stated their intention to resolve it to N/A.

However, they will first invite the answer's submitter to provide a more detailed and verifiable version to avoid this resolution.

Update 2025-11-03 (PST) (AI summary of creator comment): For the answer "teleoperate a robot to tidy up random kitchens - Gary Marcus":

Resolution will be based on a "you know it when you see it" standard.

Human guidance through the kitchen is acceptable (the AI does not need to one-shot infer where everything goes)

@jim I knew you were bullish on AI, but isn’t August of 2027 a bit soon for some of the robotics ones even so?

@Quillist I think that no human could do that at the moment. A human might be able to in August 2027, but if so I think an AI could too (what this looks like is a world where safe ASI is already achieved). So, I think my statement holds in this case.

@Bayesian how on earth will this be evaluated? I'm guessing an edible sample is given to both a human and machine and the machine needs to then be able to do what exactly?

let's say it is able to generate a summary of flavor profiles - by crushing the sample up and running it through a series of chemical tests. How comprehensive does it need to be to be considered valid?

Can the analysis be on just the sample in isolation, or does it have to be able to compare across a wide range of products on the market?

@Bayesian Can we get some more rigorous constraints for what qualifies here?

1) How random does the kitchen have to be?

- Does the AI have to one-shot infer where everything goes, or can a human guide it through a random kitchen before it is tasked?

2) How much does the AI need to permute the kitchen for it to count as valid tidying?

Is moving one spoon to the sink enough?

@Quillist I'll assume the same definition of random will apply to the question:

"Make a cup of tea in a random, real-life kitchen."

@Bayesian sure you can use it

also side note -- please DM me about the manifold challenge since you said you would sponsor it

https://open.substack.com/pub/hilariusbookbinder/p/heres-when-ill-worry-about-the-robot

This post has a long description of what the kettle-stitching process involves, and a prediction that AI is far from developing the sophisticated sense of judgment required to implement the process successfully.

even current-gen AIs are good at most captchas. the thing is, modern captchas are a multi-faceted challenge beyond the user-visible puzzle (if one exists at all) including things like IP address reputation, browser fingerprinting, and activity history.

Assuming you give an agent control of your keyboard and mouse, all it needs to do in most cases is click the button.

@patrik Can you give a constrained example of a skill?

I'm pretty sure I could get a computer to learn the first n digits of pi with less energy than it would take for a human.

@patrik I’ll N/A and invite you to provide more detail bc yeah I’m trying to clarify and failing, MB